If you’ve been in the software industry long enough, you’ve heard the term portable bandied about as an elusive goal for all things constructed from zeroes and ones. Applications and services are primarily the focus of these discussions, but often one crucial component is overlooked.

Portability originally meant cross-platform via ANSI/ISO standard languages, interpreted languages, or bytecode+VM solutions. These products allowed the “write once, run anywhere” philosophy to become pervasive. Application frameworks, MVCs, and sundry and assorted design patterns have further abstracted software to make it more flexible, modular, and portable across different hardware and OS platforms.

The next wave came with things like XML, SOAP+WSDL, REST, WebSockets, and JSON along with several others that fell by the wayside. The goal of these standards (reminds me of a quote) was to make communications between applications transparent and seamless, using platform-independent and well-defined protocols and formats to define the interfaces between different services. RFCs, W3C/IETF recommendations/drafts/standards, and industry consortiums struggled with a consensus on each, and for the most part interoperability works with typically well-documented exceptions. API services like Mashape and Mashery exist to help provide interfaces into legacy systems and coach users on how to integrate services into their solutions. It seems like this is a solved problem as well.

So what else is left to make things truly portable? An application without data is pretty much useless. Well, all the data these cross-platform, interoperable applications produce and/or consume has to have a home somewhere. Got a traditional RDBMS like MySQL, MariaDB, Postgres, Oracle, or MSSQL spun up? Or maybe you’re the cool kid on the block using a NoSQL solution like Redis, Mongo, Genie, Couch, or Cassandra that can scale up in the cloud for your coffeehouse startup that will be worth $100M in three years? (Tongue in cheek, folks – calm down.) Regardless of where you store it, there are a few things you need to be mindful of.

Data is forever.

Rosetta Stone (© Hans Hillewaert / )

Sure, floppies could be wiped with a magnet. CD/DVDs can be scratched or broken. Your NAS can be hit by lightning, or your datacenter could get knocked offline by a storm. Storage media is imperfect and can be physically destroyed. However, data is just zeroes and ones that live somewhere. This means that as long as there is a copy on some media, and something can read that media, data is for all practical purposes immortal.

Keeping that in mind – how would your data look to someone in three years? How about five? What about 10-20 years from now? This might sound a bit preposterous, but consider that we are collectively recording data at a faster rate than we can process it, and that acquisition rate is only increasing. At present, the best data scientists in the industry are skimming a lot of this data, picking out the high points and outliers. They are even exploring ways to use machine-learning algorithms to make extrapolations based on subsets of data. At some point when computing power catches up, there will be more in-depth analysis of this so-called dark data looking for trends and leading indicators of what is to come. We’re already seeing this with legacy mainframe datastores being migrated into contemporary storage systems for analysis using tools that were a pipe dream 10-20 years ago.

That being said, do you know where your data will wind up in the coming years, or who might want to dig through it? What kind of data will you have waiting for the data scientist who hasn’t even been born yet? Or even the machine learning system that is trying to make sense of it all? And what kind of structure would make it comprehensible to that future researcher? When structuring data, keep in mind that data is forever, and you never know who – or what – might be consuming it down the line.

Programming languages are not.

Sure, you can still find old F77 compilers if you dig around hard enough, but odds are you would rather not. The data-science-focused language R is the current standard in manipulating large amounts of numeric data and running statistical analysis in the industry, and Python is the new hotness, quickly gaining on it. These are just the players from a few generations optimized for analyzing large amounts of data, and by no means the only things interacting with your datastore.

You might have a website built in a battle-tested language like PHP, Ruby or Java that is currently manipulating data in your backend. But what is going to be talking to your servers in the coming years, and what language is it going to be built in? NodeJS, Python, Haskell, and Elixir are all up-and-comers, and all treat data slightly differently internally even though they support most common data standards for interoperability.

Why does the language matter? The data lives in external storage, right? True, but what kinds of data do you have stored? Bitmasks were pretty common in compiled languages, as were fixed-byte-length packed binary fields. Languages like PHP and NodeJS provide serialization mechanisms for complex data structures into – you guessed it – native formats. Check your favorite CMS or application framework database system tables, or just execute a query against a MongoDB or Postgres server where subfielding is enabled. I dare you. I watched a colleague almost rip all his hair out trying to get a custom MongoDB record type to migrate into Postgres for a Rails app. And that was using the same language and framework.

The lesson? When you externalize data, make sure it’s in a format general enough that applications written in the future can read it. Avoid complex (and often undocumented) proprietary binary data, try to use data standards where available (ISO is a great source for this), and for the love of the Zero One, don’t bury language-specific constructs in your datastore.
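To make that concrete, here is a minimal sketch in Python of writing a record out in a language-neutral form. Everything in it is made up for illustration: the record layout, the `FLAG_NAMES` table, and the `expand_flags` and `to_portable` helpers are assumptions, not part of any real system. The idea is simply to replace a packed bitmask with named values and a native date object with an ISO 8601 string before the data leaves the application.

```python
import json
from datetime import datetime, timezone

# Hypothetical permission bits, as an application might pack them into one integer.
FLAG_NAMES = {0b001: "can_read", 0b010: "can_write", 0b100: "can_admin"}


def expand_flags(mask):
    """Turn a packed bitmask into a list of self-describing names."""
    return [name for bit, name in FLAG_NAMES.items() if mask & bit]


def to_portable(record):
    """Serialize a record as JSON using only language-neutral constructs."""
    portable = {
        "id": record["id"],
        "title": record["title"],
        # ISO 8601 timestamp in UTC instead of a native datetime object.
        "created": record["created"].astimezone(timezone.utc).isoformat(),
        # Named permissions instead of an opaque integer.
        "permissions": expand_flags(record["flags"]),
    }
    return json.dumps(portable, sort_keys=True)


# Illustrative record; the values are invented for this example.
record = {
    "id": 42,
    "title": "Hello",
    "created": datetime(2015, 6, 1, 12, 0, tzinfo=timezone.utc),
    "flags": 0b101,
}
print(to_portable(record))
```

Any language with a JSON parser can read the result without knowing which bit meant what, or how the original runtime represented dates.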

Architecture evolves.

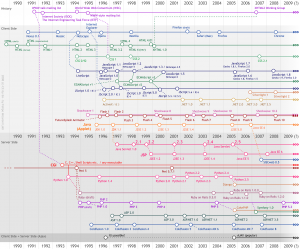

Website development timeline (© Felipe Micaroni Lalli / CC-By-SA)

Your favorite stack will change over time. That hot new stack you are building apps, services and/or web sites with now isn’t going to be what you’ll be using in 5-10 years; I can pretty much guarantee it. LAMP was all the rage 10 years ago, and is now in steady decline. MEAN is the current shiny new thing, but it too will eventually dull when something new comes onto the scene that addresses a future challenge in a better, simpler way. Likewise, organizations tend to switch solutions as required by law, shareholders, stakeholders, or accountants, which doesn’t always coincide with trends in the technical industry. Which means the data in a 200-table CMS database with no relational keys is someday going to have to be pulled into something else.

I’m often asked about migration solutions for Drupal, as it’s a system I work with pretty much daily. For those not familiar, it is an open source, community-driven, LAMP-based CMS that has seen success in both private and public sectors. In a search for a faster tool to use for large migrations, I began investigating Elixir, as it’s streamlined for pattern matching and parallelization, which could provide a huge boost to content migrations into or out of Drupal systems. My first step was to try to programmatically extract the complex database structure employed by Drupal 7 via Elixir so I could expose it to the Ecto library for that language. As I walked the code for content loads and saves, imagine my surprise to find that all relational information and logic resides in the code and not in the database. To make matters worse, individual content item storage can be overridden by their implementations, so there is no guarantee that everything you need is going to even be in one place, or use a standard storage mechanism or format. Compounding the issue, many complex content items store serialized PHP data as blobs in the designated storage system (see the previous section for why this is a bad idea). Due to the architecture and assumptions made by the developers of Drupal core, we now have what is effectively vendor lock-in with an open source system, as well as stack lock-in with language-specific data and MySQL structure assumptions. Say whaaaat?

The takeaway from this exercise is that no matter what stack is being leveraged, be mindful of the fact that you can’t assume that your data is never going to be accessed by something outside that architecture. Also be mindful of the fact that at some point in time your data is going to have to be migrated out of one architecture and into another, and the more stack-dependent your data is, the harder this job is going to be. And you might be the lucky (?) one who gets to do it. So wherever possible within the confines of your architecture, try to make your data as agnostic as possible.
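One way to keep relational information out of application code is to declare it in the schema, where any future tool can discover it. The sketch below uses Python’s built-in `sqlite3` module only because it is self-contained. The `author` and `article` tables and the `describe_relations` helper are hypothetical, and this is not how Drupal or any particular CMS stores content.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# SQLite only enforces foreign keys when this pragma is switched on.
conn.execute("PRAGMA foreign_keys = ON")
conn.executescript("""
    CREATE TABLE author (
        id INTEGER PRIMARY KEY,
        name TEXT NOT NULL
    );
    CREATE TABLE article (
        id INTEGER PRIMARY KEY,
        title TEXT NOT NULL,
        author_id INTEGER NOT NULL REFERENCES author(id)
    );
""")


def describe_relations(conn, table):
    """Return (column, referenced_table, referenced_column) for each foreign key."""
    # Table names cannot be bound as parameters; only pass trusted names here.
    rows = conn.execute(f"PRAGMA foreign_key_list({table})").fetchall()
    # Row layout: id, seq, table, from, to, on_update, on_delete, match
    return [(row[3], row[2], row[4]) for row in rows]


print(describe_relations(conn, "article"))
```

Because the relationship lives in the database, a migration tool written in another language can read it from the schema instead of reverse-engineering it from the code that produced the data.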

Open data is not just a buzzword.

The open data movement is for real, and more organizations are trying to find ways to cost-effectively provide data for public consumption. It’s prevalent enough that I’ve given talks on it at data and open source conferences. Several international organizations have arisen to promote open data and aid organizations in becoming more transparent, most notably the Open Data Institute and Open Knowledge Foundation. There is even a conference organized around the topic, the International Open Data Conference (IODC). The size of this event rivals many popularized, special-interest tech conferences and involves not just technologists but serious decision makers and policy writers from around the world. In fact, there has been such acceleration in the movement that the IODC has shifted from being scheduled every 2-3 years to an annual event starting in 2015, and the United Nations recently addressed open data in its Sustainable Development Goals.

So what has open data got to do with data portability? Everything! The main tenets of open data are:

If it can’t be spidered or indexed, it doesn’t exist.

If it isn’t available in open and machine readable format, it can’t engage.

If a legal framework doesn’t allow it to be re-purposed, it doesn’t empower.

See that second tenet? Open and machine readable formats are required for data to be truly open. This means standard formats, preferably something that is highly interoperable (i.e. not intended for a single class of consumer system) and easy for a machine to process without significant human intervention.

Piece of cake, right? Well, it can be if your data is already structured in a simplified, relational manner so it can be queried easily or have exports generated in one or more easily-consumed, standard formats. This means your data can’t have language-, stack-, or storage-specific constructs sprinkled throughout, and undocumented or proprietary formats are totally off the table. If you can’t keep your internal data in an open format, at least make sure it can be transformed into something that meets the needs of the open data community.
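As a rough illustration of how little work an export takes when the data is already clean and relational, the following Python sketch dumps the same query result as both JSON and CSV using only the standard library. The `reading` table, its columns, and its values are invented for the example, and the helper names are assumptions.

```python
import csv
import io
import json
import sqlite3

# Hypothetical column list for the hypothetical "reading" table below.
COLUMNS = ["station", "taken_on", "celsius"]

conn = sqlite3.connect(":memory:")
conn.row_factory = sqlite3.Row  # lets each row be converted to a dict
conn.executescript("""
    CREATE TABLE reading (station TEXT, taken_on TEXT, celsius REAL);
    INSERT INTO reading VALUES ('north', '2015-06-01', 18.5);
    INSERT INTO reading VALUES ('south', '2015-06-01', 21.0);
""")


def fetch_rows(conn):
    """Load the table as a list of plain dictionaries."""
    cursor = conn.execute(
        "SELECT station, taken_on, celsius FROM reading ORDER BY station"
    )
    return [dict(row) for row in cursor]


def to_json(rows):
    """Export rows as a JSON array of objects."""
    return json.dumps(rows, indent=2)


def to_csv(rows):
    """Export rows as CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=COLUMNS)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


rows = fetch_rows(conn)
print(to_json(rows))
print(to_csv(rows))
```

Nothing here depends on the application that wrote the data. That is only possible because the table holds plain text and numbers rather than serialized objects or packed binary fields.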

In closing

There are many other reasons to make your data portable. Persistence over time, language and stack agnosticism, and open data are just the easy ones to point out, and by far the most commonly encountered problems with application data by any consumer, owner or developer. For those who jump to the bottom for the tl;dr summary:

Data is forever. Make sure that what you are creating can be used in the future without relying on current tools or technology.

Programming languages are not. The language the application was written in that produced this data might not be in common use in the future, so make sure your data is language agnostic.

Architecture evolves. Don’t make stack or framework assumptions in your data as it might some day be migrated to a new architecture that is completely different from what originally produced it.

Open data is not just a buzzword. Make your data clean and well organized, and meeting the ever-growing open data needs of organizations becomes much easier.

By keeping the above considerations in mind when building an application, module, plugin, framework, et cetera ad nauseam, you can have a better handle on making the most of your data and keeping it relevant for more than the life of your application. And it will also keep some developer who hasn’t been born yet from digging through the logs to find out who made the mess they just inherited.